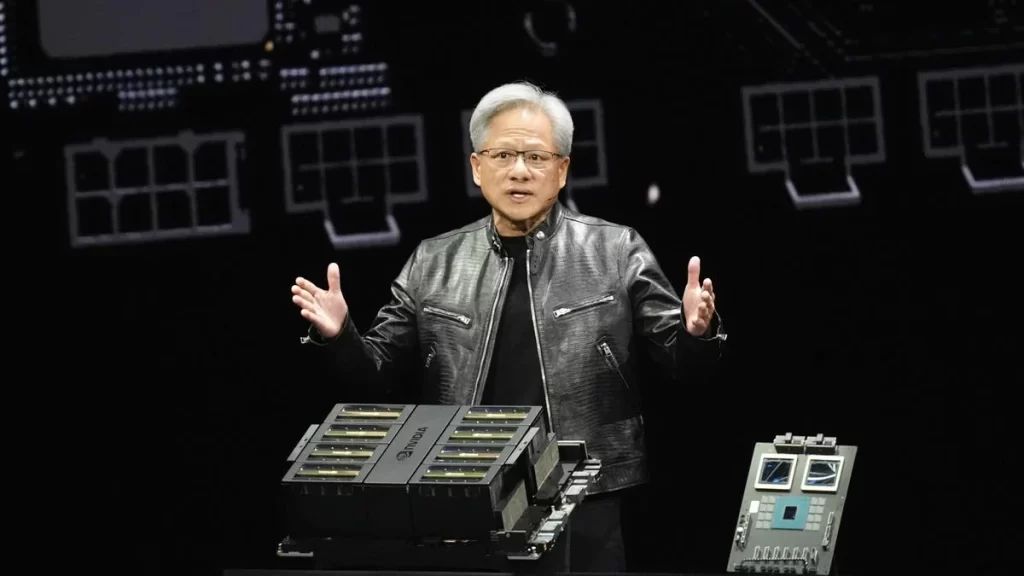

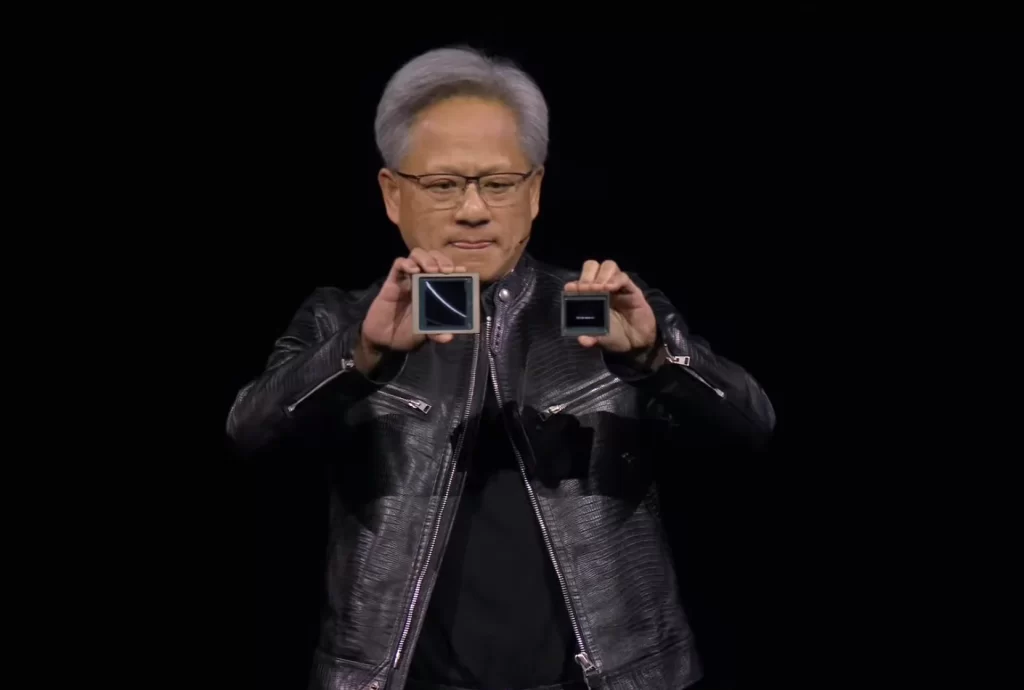

Nvidia launched the Blackwell B200 GPU at its annual GPU Technology Conference (GTC) in San Jose, California. The new-generation AI chip is named after the mathematician and statistician Dr David Harold Blackwell, and Nvidia expects it to reshape a range of markets. The Blackwell platform marks the start of a new era in computing, one that puts AI-driven technology at the center of today’s digital world.

In this article, we take a thorough look at the technical capabilities and market advantages of Nvidia’s Blackwell B200 GPU, as well as its potential in AI. We explore its key features, its impact on AI and computing, and the growing ecosystem it supports. By the end, you should have a clear picture of what the Blackwell GPU can do.

Features of the Nvidia Blackwell B200 GPU

Nvidia’s Blackwell B200 GPU is a technological marvel designed to supercharge AI applications. Here’s a breakdown of its standout features:

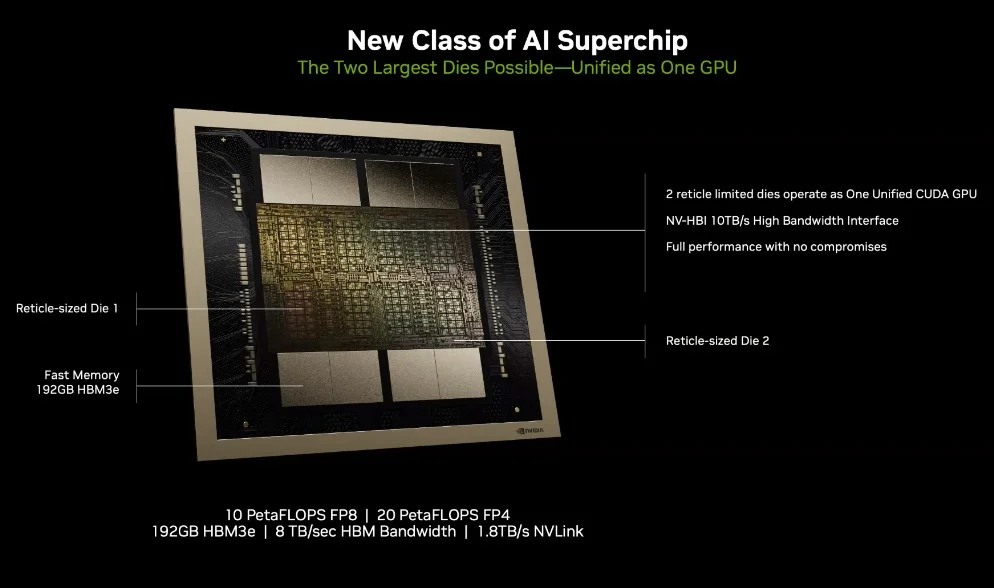

- Unprecedented Performance: With 208 billion transistors, the chip delivers up to 20 petaflops of FP4 compute. It carries 192GB of HBM3e memory with 8 TB/s of memory bandwidth, making it a powerhouse for AI computations.

- Innovative Design: The B200 uses a dual-die configuration in which two reticle-limited dies, built on TSMC’s custom 4NP process, are joined by a 10 TB/s NV-HBI chip-to-chip link so that they operate as a single, unified GPU. At system level, Nvidia’s liquid-cooled Blackwell racks circulate about 2 litres of coolant per second.

- AI-Specific Enhancements: The B200 adds a second-generation Transformer Engine with new FP4 support and fifth-generation NVLink, which provides 1.8 TB/s of bidirectional bandwidth per GPU and, through NVLink switches, lets up to 576 GPUs communicate at high speed. According to Nvidia, these changes double compute, bandwidth, and supported model size, significantly speeding up AI training and inference.
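
To put these per-chip figures in perspective, here is a rough Python sketch of some back-of-the-envelope arithmetic. It assumes decimal units (1 GB = 10^9 bytes, 1 TB/s = 10^12 bytes/s), treats Nvidia’s quoted peak numbers as if they were sustained, and counts model weights only (no activations or KV cache). The "ridge point" framing comes from the general roofline performance model, not from Nvidia, and all constant and function names are illustrative.

```python
# Back-of-the-envelope arithmetic for a single B200 using the headline
# figures quoted above. Assumptions: decimal units, quoted peak numbers
# treated as sustained, and weights-only memory (no KV cache/activations).

PEAK_FP4_FLOPS = 20e15   # 20 petaflops at FP4 (quoted peak)
HBM_BYTES = 192e9        # 192 GB of HBM3e
HBM_BANDWIDTH = 8e12     # 8 TB/s of memory bandwidth

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"FP16": 2.0, "FP8": 1.0, "FP4": 0.5}


def ridge_point(flops: float, bandwidth: float) -> float:
    """FLOPs per byte a kernel must reach before it stops being
    memory-bound (the 'ridge point' of a simple roofline model)."""
    return flops / bandwidth


def max_params(memory_bytes: float, precision: str) -> float:
    """Largest parameter count whose weights alone fit in memory."""
    return memory_bytes / BYTES_PER_PARAM[precision]


def full_read_seconds(memory_bytes: float, bandwidth: float) -> float:
    """Time to stream the entire memory once at peak bandwidth."""
    return memory_bytes / bandwidth


if __name__ == "__main__":
    print(f"Ridge point: {ridge_point(PEAK_FP4_FLOPS, HBM_BANDWIDTH):.0f} FLOPs/byte")
    for precision in BYTES_PER_PARAM:
        billions = max_params(HBM_BYTES, precision) / 1e9
        print(f"{precision}: ~{billions:.0f}B parameters (weights only)")
    ms = full_read_seconds(HBM_BYTES, HBM_BANDWIDTH) * 1e3
    print(f"One full pass over HBM: ~{ms:.0f} ms")
```

Under these assumptions, a kernel would need roughly 2,500 FLOPs per byte of memory traffic before compute, rather than bandwidth, became the limit. Each halving of bits per parameter doubles the number of weights that fit in 192GB, which is one way to read the claim that FP4 doubles supported model size.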

Together, these features make the Blackwell B200 a vital tool for advancing AI technologies. Its computational power and efficiency make it a strong choice for anyone looking to expand their AI capabilities.

Impact on AI and Computing

Nvidia’s Blackwell B200 GPU marks a giant leap in AI and computing, driven by gains in both efficiency and power. Here’s how:

Cost and Energy Efficiency:

- According to Nvidia, cuts cost and energy consumption for LLM inference by up to 25x compared with the H100.

- Enables the operation of trillion-parameter-scale AI models with significant savings.

Performance and Scalability:

- Supports the development of real-time generative AI on large language models (LLMs), boosting performance while slashing energy use.

- The NVIDIA GB200 NVL72 system showcases 720 petaflops of AI training and 1.4 exaflops of AI inference performance, illustrating unprecedented scalability.

Innovation and Application:

- Facilitates breakthroughs across various fields, including data processing, engineering simulation, and quantum computing.

- Powers Nvidia’s next-generation DGX SuperPOD, an AI supercomputer built with GB200 Grace Blackwell Superchips that offers 11.5 exaflops of FP4 AI computing power.
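
The rack- and pod-level figures above follow from simple multiplication. This sketch assumes 72 B200 GPUs per GB200 NVL72 rack, eight racks per SuperPOD, 20 petaflops of FP4 and 10 petaflops of FP8 per GPU (the FP8 figure is our assumption, taken as half the FP4 rate), and perfectly linear scaling with no interconnect overhead, which real systems will not achieve.

```python
# Scale per-GPU peak throughput up to a GB200 NVL72 rack and a DGX SuperPOD.
# Assumptions: 72 B200 GPUs per rack, 8 racks per SuperPOD, FP4 = 20 PFLOPS
# and FP8 = 10 PFLOPS per GPU, and ideal linear scaling (no overhead).

PFLOPS = 1e15
EFLOPS = 1e18

GPU_PEAK = {"FP4": 20 * PFLOPS, "FP8": 10 * PFLOPS}
GPUS_PER_RACK = 72
RACKS_PER_SUPERPOD = 8


def aggregate_flops(per_gpu: float, gpus: int) -> float:
    """Ideal aggregate throughput of `gpus` identical GPUs."""
    return per_gpu * gpus


if __name__ == "__main__":
    rack_fp4 = aggregate_flops(GPU_PEAK["FP4"], GPUS_PER_RACK)
    rack_fp8 = aggregate_flops(GPU_PEAK["FP8"], GPUS_PER_RACK)
    pod_fp4 = aggregate_flops(GPU_PEAK["FP4"], GPUS_PER_RACK * RACKS_PER_SUPERPOD)
    print(f"NVL72 FP4 inference: {rack_fp4 / EFLOPS:.2f} exaflops")
    print(f"NVL72 FP8 training:  {rack_fp8 / PFLOPS:.0f} petaflops")
    print(f"SuperPOD FP4:        {pod_fp4 / EFLOPS:.2f} exaflops")
```

With these inputs the sketch prints about 1.44 exaflops (FP4) and 720 petaflops (FP8) per rack and about 11.52 exaflops for the SuperPOD, in line with the rounded figures Nvidia quotes.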

The Ecosystem Around Blackwell B200

The ecosystem surrounding Nvidia’s Blackwell B200 GPU is vast and rapidly expanding, encompassing a wide range of industry giants and innovative technologies. Critical components of this ecosystem include:

- Major Cloud Providers and AI Companies:

Every major cloud provider, server maker, and leading AI company is expected to adopt the Blackwell platform, with Amazon Web Services, Google, Microsoft, and Oracle among the first. These companies are integrating Blackwell into their AI and cloud computing offerings, underscoring its broad acceptance and potential.

- Hardware Configuration and Performance:

The GB200 NVL72 system, a cornerstone of the Blackwell ecosystem, has remarkable specs. Each of its 18 1U compute servers houses two GB200 Superchips (four B200 GPUs and two Grace CPUs in total) and delivers 80 petaflops of FP4 AI inference and 40 petaflops of FP8 AI training performance. The setup is supported by 14.4 TB/s of total bandwidth and advanced in-network compute capabilities, exemplifying the platform’s power and efficiency.

- Scalability and Networking:

Scalability is a hallmark of the Blackwell ecosystem. A DGX SuperPOD built from eight GB200 NVL72 systems offers 240TB of fast memory and 11.5 exaflops of FP4 computing, and it can scale further with additional racks, demonstrating the platform’s capacity for massive AI workloads. Integration with NVIDIA’s Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms adds advanced networking for fast communication and data transfer across the ecosystem.
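
The component counts behind these systems can be checked with a small, hypothetical model of the hierarchy. The sketch assumes that a GB200 Superchip pairs one Grace CPU with two B200 GPUs, that each 1U compute server holds two Superchips, that an NVL72 rack has 18 servers, and that a SuperPOD has eight racks; the 240TB memory figure is the quoted SuperPOD total, split evenly across racks as a further assumption.

```python
# Hypothetical model of the GB200 NVL72 / DGX SuperPOD hierarchy, used only
# to count components. The composition constants are assumptions based on
# figures quoted in this article, not values read from Nvidia tooling.
SUPERCHIPS_PER_SERVER = 2      # GB200 Superchips per 1U compute server
SERVERS_PER_RACK = 18          # 1U compute servers per NVL72 rack
RACKS_PER_SUPERPOD = 8         # NVL72 racks in the quoted SuperPOD
GPUS_PER_SUPERCHIP = 2         # B200 GPUs per GB200 Superchip
CPUS_PER_SUPERCHIP = 1         # Grace CPUs per GB200 Superchip
SUPERPOD_FAST_MEMORY_TB = 240  # quoted fast memory for the whole SuperPOD


def components(servers: int) -> dict[str, int]:
    """Count Superchips, B200 GPUs and Grace CPUs in `servers` compute servers."""
    superchips = servers * SUPERCHIPS_PER_SERVER
    return {
        "superchips": superchips,
        "b200_gpus": superchips * GPUS_PER_SUPERCHIP,
        "grace_cpus": superchips * CPUS_PER_SUPERCHIP,
    }


def memory_per_rack_tb() -> float:
    """Quoted SuperPOD memory split evenly across its racks (an assumption)."""
    return SUPERPOD_FAST_MEMORY_TB / RACKS_PER_SUPERPOD


if __name__ == "__main__":
    levels = {
        "1U server": 1,
        "NVL72 rack": SERVERS_PER_RACK,
        "SuperPOD": SERVERS_PER_RACK * RACKS_PER_SUPERPOD,
    }
    for name, servers in levels.items():
        print(f"{name:>10}: {components(servers)}")
    print(f"Fast memory per rack: ~{memory_per_rack_tb():.0f} TB")
```

Under these assumptions a rack holds 72 B200 GPUs and 36 Grace CPUs, and an eight-rack SuperPOD holds 576 GPUs, the same number NVLink is quoted as connecting; the even split gives about 30TB of fast memory per rack.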

This ecosystem’s architecture not only highlights Nvidia’s commitment to pushing the boundaries of AI and computing but also sets a new standard for performance, efficiency, and scalability in the industry.

Future Implications and Potential Developments

The future implications and potential developments of Nvidia’s Blackwell B200 GPU and its ecosystem are vast, pointing toward a transformative era in computing and AI. Here’s a simplified breakdown:

Performance Leap with GB200:

- The GB200 Superchip combines two B200 GPUs with a single Grace CPU. In a GB200 NVL72 system, Nvidia claims up to 30 times the LLM inference performance of the same number of H100 GPUs. This leap sets a new benchmark for AI capabilities, enabling more complex, real-time AI applications.

Market Competition and Innovation:

- Despite Nvidia’s groundbreaking advancements, competition remains fierce, with rivals like Intel and AMD introducing competitive products. This rivalry is not just a challenge but a catalyst for innovation, pushing the boundaries of what’s possible in AI and computing technologies.

Gaming and Supercomputing:

- The Blackwell architecture is anticipated to power the upcoming GeForce RTX 50 Series, including the flagship RTX 5090, which hints at significant gains in gaming. Meanwhile, the DGX SuperPOD built with GB200 Grace Blackwell Superchips shows the potential of AI supercomputing, with 11.5 exaflops of AI supercomputing power and intelligent predictive-management capabilities designed to keep AI infrastructure running without interruption.

These developments not only underscore Nvidia’s leadership in AI and computing but also highlight the evolving landscape of technology, where innovation, competition, and collaboration pave the way for future breakthroughs.

Conclusion

Through the unveiling of Nvidia’s Blackwell B200 GPU, we’ve witnessed the potential for transformative change in the realms of AI and computing, emphasizing the importance of cutting-edge technology in shaping the future.

The Blackwell B200 GPU’s features not only redefine performance standards but also promise significant gains in cost and energy efficiency, underlining Nvidia’s role in pushing AI technologies forward. This article has covered the B200’s key attributes, its impact on AI and computing, and the expanding ecosystem it supports, which together show why it is a game-changer in its domain.

Looking ahead, the developments tied to Nvidia’s Blackwell B200 and its ecosystem signal an exciting era of advances in computing and AI. With the promise of unprecedented performance and the prospect of greater competition and innovation in the market, the journey of discovery and improvement is clearly far from over.

As we continue to explore the capabilities of AI and computing, the Blackwell B200 stands as a beacon of potential, guiding us toward a future in which technology’s limits are continually challenged and redefined.

FAQs

What exactly is the Blackwell chip from Nvidia?

The Blackwell chip is a powerful processor made of two large GPU dies, each containing 104 billion transistors (208 billion in total). The dies sit side by side and are joined by a 10 TB/s NV-HBI chip-to-chip link, which binds them together so that they work as a single, unified GPU.

How are Nvidia’s AI chips utilized?

Nvidia’s AI chips serve a wide range of users, including data scientists, application developers, and software infrastructure engineers. They are used in fields such as computer vision, speech recognition, natural language processing (NLP), generative AI, recommender systems, and many other AI-driven technologies.

What makes Nvidia GPUs essential for AI?

Nvidia GPUs are critical for AI because they run the massively parallel matrix operations at the heart of machine learning with the performance and efficiency that training and inference demand. They are often likened to rare earth metals or gold in the AI industry, underscoring their fundamental role in the development of generative AI.

What is Nvidia’s AI operating system?

Nvidia’s AI operating system is known as NVIDIA AI Enterprise. It is a comprehensive, cloud-native software suite that enhances data science workflows and simplifies the process of developing and deploying AI applications at an enterprise level, including those involving generative AI.